Trump’s Executive Order on AI Might Be a Bridge Too Far

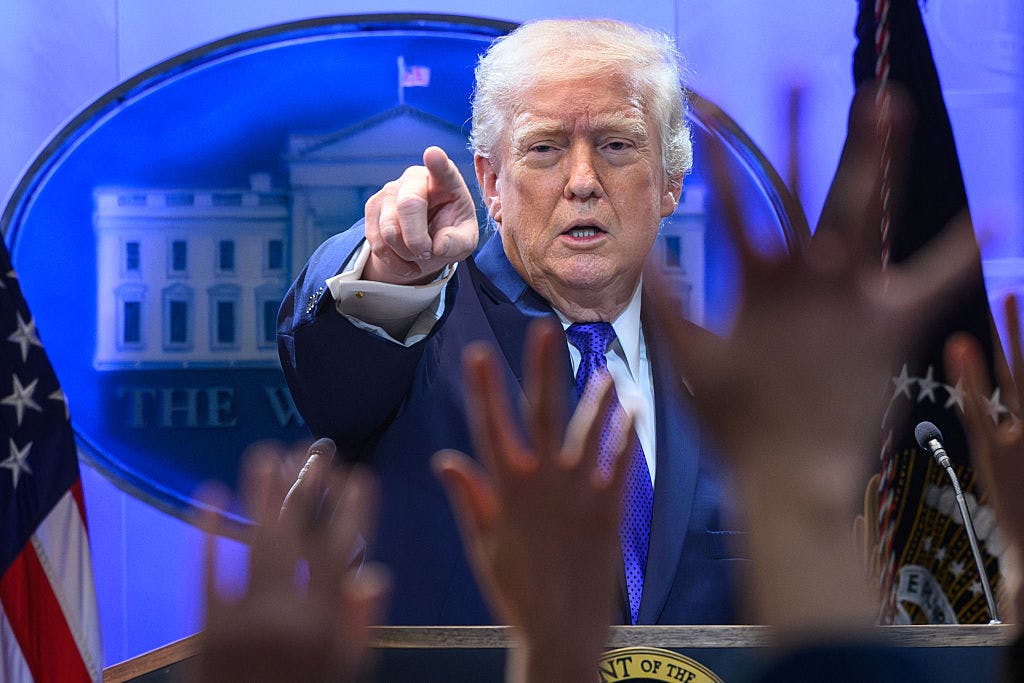

President Donald Trump issued an executive order Dec. 11, titled “Ensuring a National Policy Framework for Artificial Intelligence.” Its broadly stated goal is “to sustain and enhance the United States’ global AI dominance through a minimally burdensome national policy framework.”

With more details to come, we hope Trump’s approach properly respects the federalism that the Constitution establishes as a critical safeguard for our liberty.

First, a little background on this fast-moving area.

In October 2023, President Joe Biden issued Executive Order 14110, “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.”

Spanning 36 pages in the Federal Register, EO 14110 outlined eight “guiding principles and priorities” used to govern the development and use of AI.

Not surprisingly, the list included “advancing equity and civil rights,” with vague references to “existing inequities” and “engagement with affected communities,” all signaling Biden’s intention for AI to be used to drive an ideological agenda.

A few days after taking office for the second time, Trump issued Executive Order 14179, revoking the Biden EO and asserting the goal “to sustain and enhance America’s global AI dominance.” Trump’s new order, however, is aimed at minimizing any obstacle to this goal that might come from state efforts to regulate AI.

To this end, the order directs the Secretary of Commerce, within 90 days, to publish an evaluation of existing AI-related state laws identifying those that conflict with the goal of minimally burdensome national AI policy.

Trump also created an AI litigation task force “whose sole responsibility shall be to challenge State AI laws inconsistent with [this] policy.” The devil, they say, is in the details, and the scope of the secretary’s evaluation and the task force’s potential litigation will either respect or reject federalism.

The order itself refers to a few categories of state AI-related efforts, which are sure to receive close scrutiny.

The first category includes state laws that are potentially unlawful because they “unconstitutionally regulat[e] interstate commerce.” The Constitution gives Congress the power to “regulate Commerce…among the several states,” and Commerce Clause cases typically involve whether something the federal government has done has exceeded that power.

In United States v. Lopez, for example, the Supreme Court in 1995 said Congress went too far with the Gun-Free School Zones Act, which banned possession of a gun within 1,000 feet of a local school.

Five years later, in United States v. Morrison, the Court came to the same conclusion regarding a provision of the Violence Against Women Act that attempted to turn local crimes of violence into federal civil lawsuits. In NFIB v. Sebelius, a Court majority said the Commerce Clause doesn’t give Congress authority to require everyone to purchase health insurance.

In the case of AI, however, Congress hasn’t done anything.

Instead, the order appears to be relying on the so-called “dormant Commerce Clause” theory to target state laws that improperly attempt to regulate interstate commerce.

As the Supreme Court explained in 2023 in National Pork Producers Council v. Ross, the Commerce Clause has a “negative command,” which prohibits states from enforcing some state economic regulations “even when Congress has failed to legislate on the subject.”

Once again, though, the impact of this strategy on federalism depends on how broad a brush the administration, including litigation initiated by the task force, intends to use.

Its options might be limited by Supreme Court decisions such as National Pork Producers. There, the Court explained that the “very core” of its dormant Commerce Clause jurisprudence involves laws that discriminate against out-of-state businesses to benefit in-state businesses.

This principle does not, however, describe state AI-related laws, which apply equally to out-of-state and in-state residents and entities.

The second category described in the order consists of state laws that, as the Biden EO would have done, “require AI models to alter their truthful outputs, or that may compel AI developers or deployers to disclose or report information in a manner that would violate the First Amendment or any other provision of the Constitution.”

These first two categories of potentially problematic state laws appear to be the kind that might be challenged in court by the litigation task force.

The third category is more general.

The order refers to “onerous and excessive laws … that threaten to stymie innovation” or those that are “inconsistent” with the goal of setting national AI policy.

The Supreme Court’s National Pork Producers decision is also relevant in this category.

The Court rejected the broad argument claiming state laws violate the dormant Commerce Clause solely by imposing new costs on commerce both in and outside the state. The Court cautioned lower courts against using the dormant Commerce Clause as a “‘roving license for federal courts to decide what activities are appropriate for state and local governments to undertake.’”

Here’s where the secretary’s evaluation is particularly important. The impact on federalism will depend on how vague terms such as “onerous,” “excessive,” or “inconsistent” are defined. Different definitions could have dramatically different results.

Under the order, for example, states with “onerous laws” could see federal funding for expanding broadband access eliminated. A broad brush seeking to eliminate virtually all state AI regulation could prevent the states from exercising powers that the Constitution reserves for them.

Here’s an example.

Technology currently complicates states’ longstanding efforts to protect children from access or exposure to sexually explicit material. Some states, like Texas, have passed laws requiring sexually explicit websites to verify the age of their visitors.

In Free Speech Coalition v. Paxton, the Supreme Court found that regulating technology in this way is “within a State’s authority to prevent children from accessing sexually explicit content” and, therefore, that the law did not violate the First Amendment.

President Trump’s order does state that a national policy framework should “ensure that children are protected, censorship is prevented, copyrights are respected and communities are safeguarded.”

There’s no reason why development of national AI policy must displace such efforts to protect children. The two goals are not mutually exclusive.

The president is correct that “State-by-State regulation” has the potential to “create a patchwork of 50 different regulatory regimes that makes compliance more challenging, particularly for start-ups.” As revoking Biden’s EO shows, he is clearly attuned to laws banning “algorithmic discrimination.”

We believe a national policy framework is possible, even laudable, but it depends on the details, including the secretary’s evaluation of state laws and how the task force pursues litigation.

It also depends on what the order describes as “a legislative recommendation establishing a uniform Federal policy framework for AI that preempts State AI laws that conflict with the policy set forth” in the Executive Order.

Under the Constitution’s Supremacy Clause, federal law preempts state law where the two are clearly in conflict. The legislative recommendation mentioned in the order, therefore, can establish more clearly where national policy should take precedence over state laws.

The president’s preference for a national AI policy should be in sync with the Constitution’s principle of federalism, rather than further impairing it.

The post Trump’s Executive Order on AI Might Be a Bridge Too Far appeared first on The Daily Signal.
