Why religion will save us from automated warfare in the digital age

The technology now exists to render a playable video game computationally in real time, a first achieved with the classic pixelated first-person shooter Doom.

Don’t yawn: this isn’t just a footnote in the annals of nerd history. Elon Musk promptly chimed in with a reply to the news, promising, “Tesla can do something similar with real world video.”

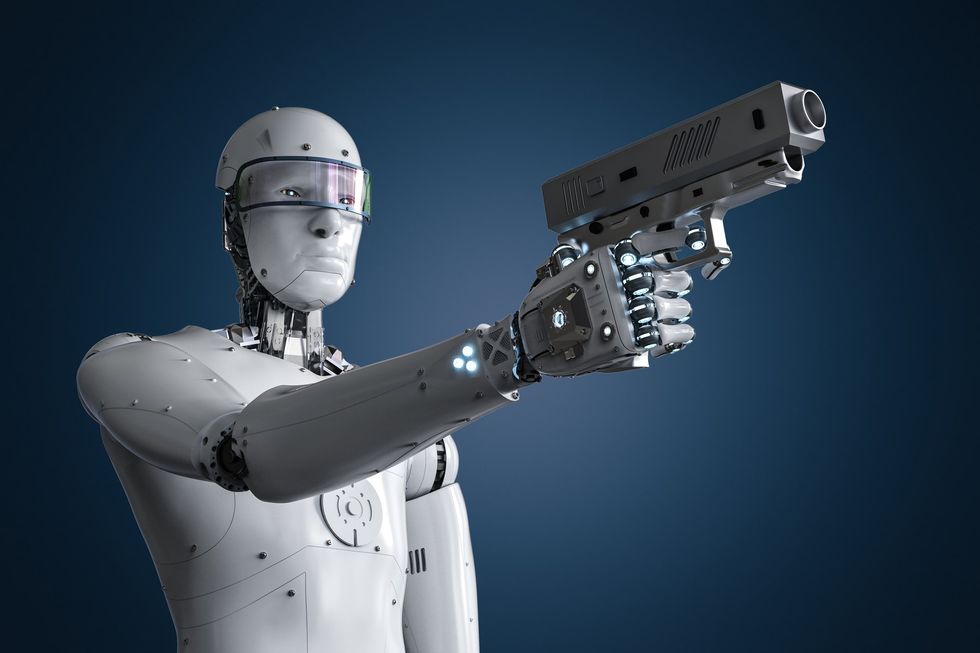

The military applications of this latest leap forward are obvious enough. A person at a terminal — or behind the wheel — enters a seamless virtual environment every bit as complex and challenging as a flesh-and-blood environment … at least as far as warfare goes. Yes, war has a funny way of simplifying or even minimizing our lived experience of our own environment: kill, stay alive, move forward, repeat. No wonder technological goals of modeling or simulating the given world work so well together with the arts and sciences of destruction.

But another milestone in the computational march raises deeper questions about the automation of doom itself. Coinbase CEO Brian Armstrong announced that the company has “witnessed our first AI-to-AI crypto transaction.”

“What did one AI buy from another? Tokens! Not crypto tokens, but AI tokens (words basically from one LLM to another). They used tokens to buy tokens,” he tweeted, adding an emoji. “AI agents cannot get bank accounts, but they can get crypto wallets. They can now use USDC on Base to transact with humans, merchants, or other AIs. Those transactions are instant, global, and free. This,” he enthusiastically concluded, “is an important step to AIs getting useful work done.”

In the fractured world of bleeding-edge tech, “doomerism” is associated with the fear that runaway computational advancement will automate a superintelligence that will destroy the human race.

Perhaps oddly, less attention flows toward the much more prosaic likelihood that sustainable war can soon be carried out in a “set it and forget it” fashion — prompt the smart assistant to organize and execute a military campaign, let it handle all the payments and logistics, human or machine, and return to your fishing, hiking, literary criticism, whatever.

Yes, there’s always the risk of tit-for-tat escalation unto planetary holocaust. But somehow, despite untold millions in wartime deaths and nuclear weapons aplenty, we’ve escaped that hellacious fate.

Maybe we’re better off focusing on the obvious threats of an ordinary world war in the digital age.

But that would require a recognition that such a “thinkable” war is itself so bad that we must change our ways right now — instead of sitting around scaring ourselves to death with dark fantasies of humanity’s enslavement or obliteration.

That would require recognizing that no matter how advanced we allow technology to become, the responsibility for what technology does will always rest with us. For that reason, the ultimate concern in the digital age is who we are responsible for and answerable to.

As the etymology of the word “responsible” reveals (it traces back to ancient terminology for the pouring out of libations in ritual sacrifice), this question of human responsibility points inescapably toward religious concepts, experiences, and traditions.

Avoiding World War Autocomplete means accepting that religion is foundational to digital order — in ways we weren’t prepared for during the electric age typified by John Lennon’s “Imagine.” It means facing up to the fact that different civilizations with different religions are already well on their way to dealing in very different ways with the advent of supercomputers.

And it means ensuring that those differences don’t result in one or several civilizations freaking out and starting a chain reaction of automated violence that engulfs the world — not unto the annihilation of the human race, but simply the devastation of billions of lives. Isn’t that enough?

Unfortunately, right now, the strongest candidate for that civilizational freakout is the United States of America. Not only have we suffered the biggest shock from how digital tech has turned out, but we also have the farthest to fall in relative terms from our all-too-recent status as a global superpower. We are now governed by people who seem hell-bent on preserving their power regardless of the cost, people who are also getting first dibs on the most powerful AIs in development.

Scary as automated conflict indeed is, the biggest threat to the many billions of humans — and multimillions of Americans — who would suffer most in a world war isn’t the machines. It’s the people who want most to control them.

Originally Published at Daily Wire, World Net Daily, or The Blaze
